Analysis under your control

When you use VoxLogicA, your source code is typically on the order of 10-30 lines (yes, we didn't add any "K" after the numbers). The language of VoxLogicA is close to the domain of imaging, featuring constructs like near, reachable, and similar to.
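To give a feel for what spatial constructs of this kind express, here is a minimal sketch in plain Python. It is not VoxLogicA code and does not use VoxLogicA's engine: the functions `near` and `reachable` below are hypothetical helpers written for this illustration, and their exact meaning (Euclidean distance for `near`, 4-connected flood fill for `reachable`) is an assumption that may differ from the semantics VoxLogicA defines.

```python
from collections import deque


def near(mask, radius):
    """Mark every pixel within `radius` (Euclidean) of a marked pixel.

    Assumption: illustrative semantics only, computed by brute force.
    """
    rows, cols = len(mask), len(mask[0])
    marked = [(r, c) for r in range(rows) for c in range(cols) if mask[r][c]]
    return [
        [
            any((r - mr) ** 2 + (c - mc) ** 2 <= radius ** 2 for mr, mc in marked)
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def reachable(source, through):
    """Mark the source pixels plus every pixel that can be reached from
    them by stepping only onto pixels of `through` (4-connectivity).

    Assumption: a simplified reading of "reachable", for illustration.
    """
    rows, cols = len(source), len(source[0])
    result = [row[:] for row in source]
    queue = deque(
        (r, c) for r in range(rows) for c in range(cols) if source[r][c]
    )
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and through[nr][nc] and not result[nr][nc]:
                result[nr][nc] = True
                queue.append((nr, nc))
    return result


# A one-row "image": a seed pixel on the left, candidate tissue elsewhere.
seed = [[True, False, False, False]]
tissue = [[False, True, False, True]]

print(near(seed, 1))            # pixels within distance 1 of the seed
print(reachable(seed, tissue))  # seed plus tissue connected to it
```

In this toy image the rightmost tissue pixel is not connected to the seed, so `reachable` leaves it out. Composing a handful of such operators is the style of reasoning that a short analysis relies on.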

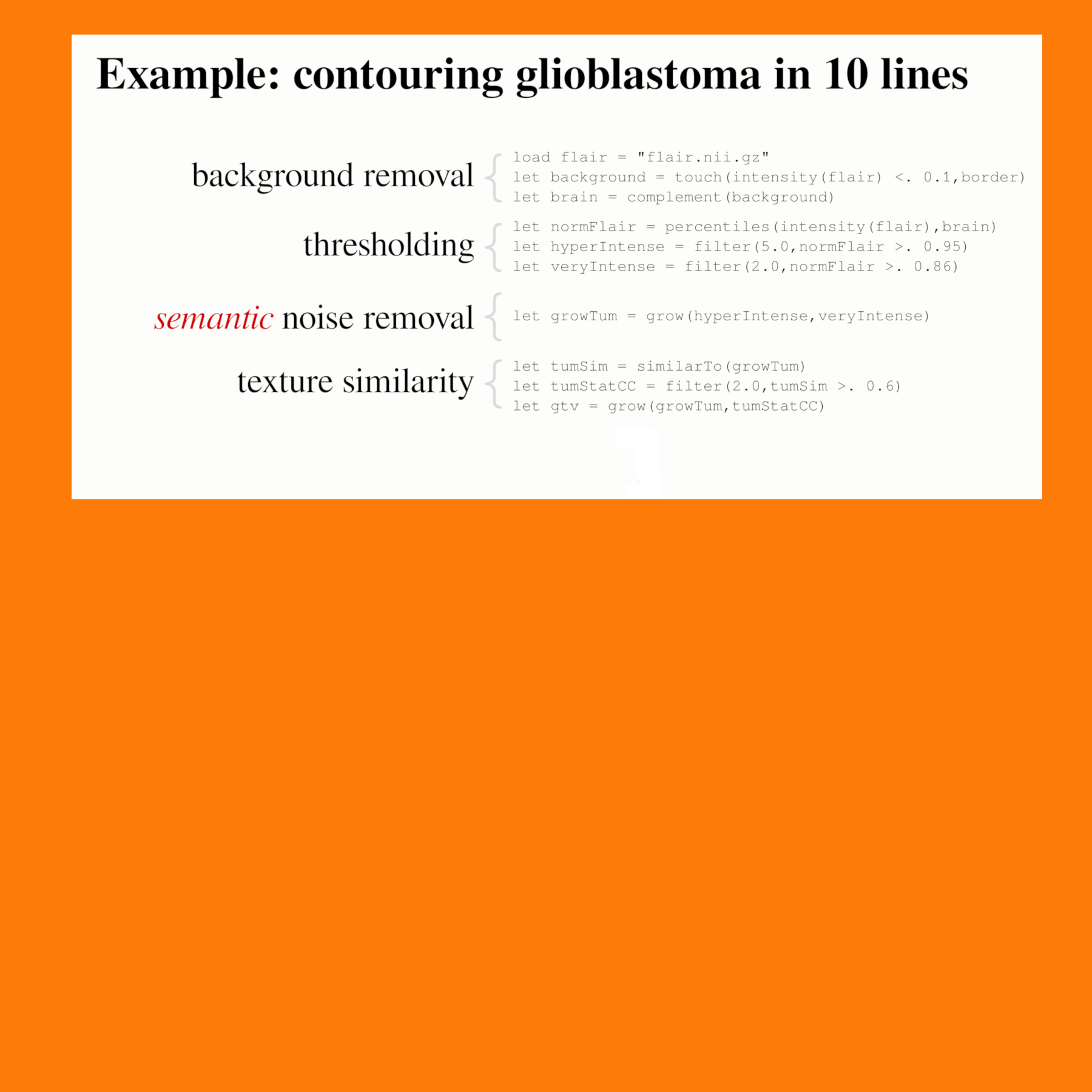

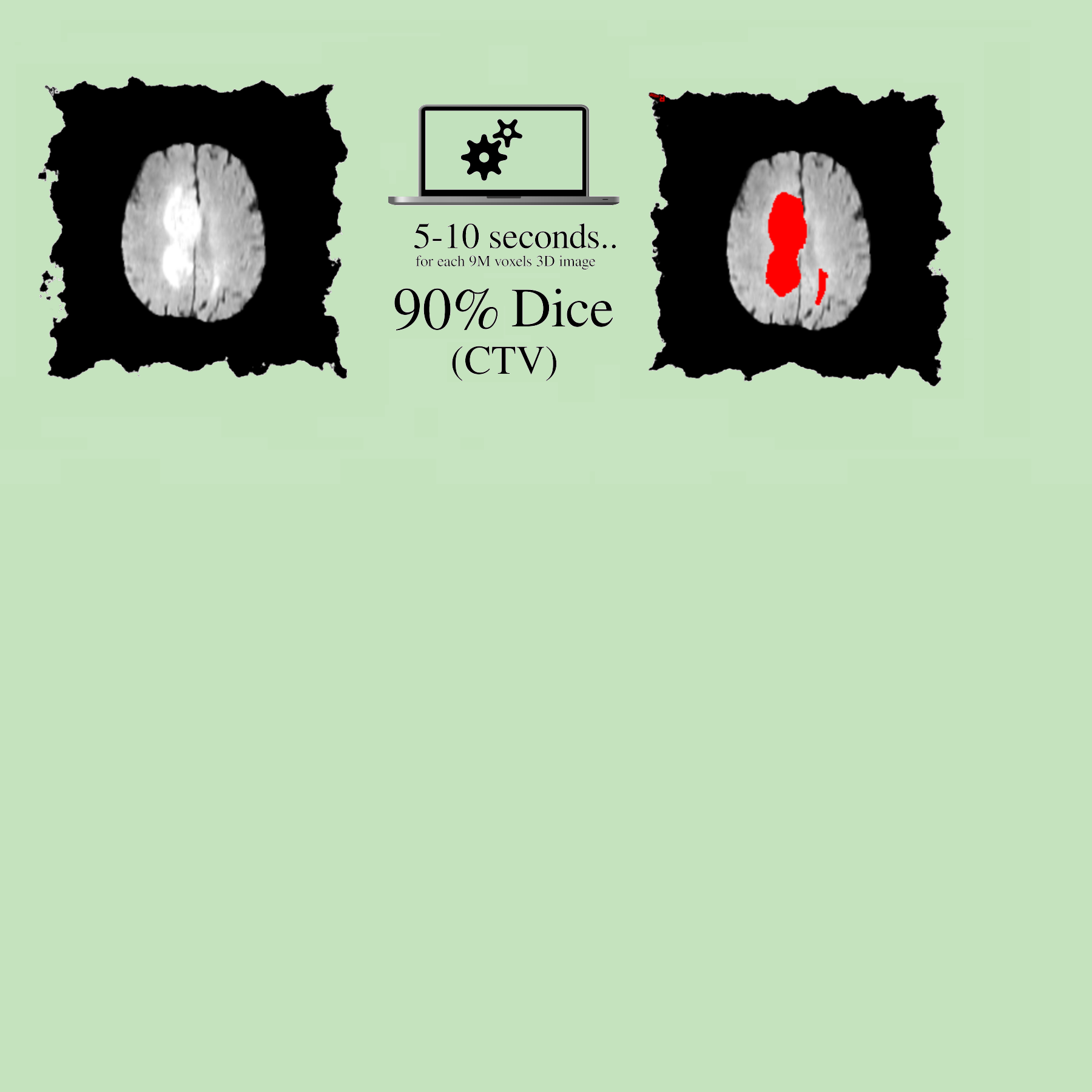

We have tested our approach on the BraTS dataset for glioblastoma segmentation. Our method is described in 10 lines of text and runs in seconds on a standard desktop. Its accuracy is comparable to that of humans and of best-in-class machine learning algorithms.

Curious? Read our TACAS 2019 publication.

Fast and predictable

Inside VoxLogicA, there's a powerful computational engine called a Model Checker. VoxLogicA computes your analysis in parallel, using all the available CPU cores. Every analysis is backed by a formal mathematical interpretation, so it is guaranteed to yield the same result on Windows, Linux, and OSX.

Easy to Use, Easy to Collaborate

It does not take long to learn how to use VoxLogicA. An analysis is so short that you can share it with your colleagues, even in a chat window.

Want to learn? Read our tutorial.

Contact options:

VoxLogicA needs a community to grow!

We need you, users, developers, and domain experts, to shape the future of our technology. Please get in touch if you are interested.